20 Mar 2014 by Michael Müller

Interview: Dru Lavigne

This time the interview series continues with Dru Lavigne.

Dru is, amongst other things, the Community Manager of the PC-BSD project, a director at the FreeBSD Foundation, and the founder and president of the BSD Certification Group.

She is also a technical writer who has published books on topics surrounding BSD and writes the blog A Year in the Life of a BSD Guru.

Who are you and what do you do?

I currently work at iXsystems as the "Technical Documentation Specialist". In reality, that means I get paid to do what I love most: write about BSD. Lately, that means maintaining the documentation for the PC-BSD and FreeNAS projects, assisting the FreeBSD Documentation Project in preparing a two-volume print edition of the FreeBSD Handbook, and writing a regular column for the upcoming FreeBSD Journal.

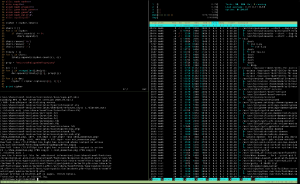

Which software or programs do you use most frequently?

At any given point in time, the following apps will be open on my PC-BSD system: Firefox, Pidgin, KWrite, and several Konsoles. Firefox has at least 20 tabs open, and Pidgin is logged into #pcbsd, #freenas, #bsdcert, and #bsddocs. make or igor (the FreeBSD automated doc proofreading utility) is most likely running in one Konsole, while other Konsoles have various man pages open or various commands that I'm testing. Other daily tools, depending upon which doc set I'm working on, include DocBook XML, OpenOffice, GIMP, Calibre, Acroread, and VirtualBox for testing images. I also spend a fair amount of time in the forums, wikis, and bug tracking systems of the projects that I write documentation for.

Why did you decide to use your particular operating system(s) of choice?

I had been using FreeBSD as my primary desktop since 1996 and pretty much had an installation routine down pat for getting everything I needed installed, up, and running whenever I set up a new system. When Kris Moore started the PC-BSD Project, I liked the graphical installer and its ability to set up everything I needed quickly. Sure, I could do it myself, but why spend an hour or so doing that when someone else had already created something to automate the process?

In what manner do you communicate online?

If it's not in my inbox, it probably doesn't happen. However, IRC, Facebook, and LinkedIn are convenient for getting an immediate answer to something before summarizing it in an email or acting on an item.

Which folders can be found in your home directory?

A directory for temporary downloads and patches, one for the PC-BSD source, one for the FreeBSD doc source, one for presentations, one for articles, one for BSD Certification material, one for each version of the PC-BSD docs, and one for each version of the FreeNAS docs.

Which paper or literature has had the most impact on you?

My favorite O'Reilly books are Unix Power Tools by Peek, Powers, et al., TCP/IP Network Administration by Craig Hunt, and Open Sources: Voices from the Open Source Revolution.

What has had the greatest positive influence on your efficiency?

Hard to say, as my brain tends to gravitate naturally towards the most efficient way of doing anything. I can't imagine working without an Internet connection, though, and the times when the Internet is not available are frustrating work-wise.

How do you approach the development of a new project?

Speaking from a doc project perspective, I tend to think big picture first and then lay out the details as they are tested and then written. I can quickly visualize a flow, an associated table of contents, and an estimate of the number of pages required; the rest is the actual writing.

Which programming language do you like working with most?

I don't program per se, but I can work my way around a Makefile. With regard to text formatting languages, I've used LaTeX, DocBook XML, PseudoPOD, groff, MediaWiki, TikiWiki, ikiwiki, etc. I can't say that I have a favorite text formatting language, as each is just yet another set of tags I have to remember when writing text. I do have to be careful to use the correct tags for the specified doc set and to avoid using tags or entities when writing emails or chats :-)

In your opinion, which piece of software should be rewritten from scratch?

No comment on software (as I'm not a developer). However, every day I see docs that need to be ripped out and started from scratch, as they are either so out of date as to be unusable or their flow doesn't match how a user actually uses the software. That's assuming that any docs for that software exist at all.

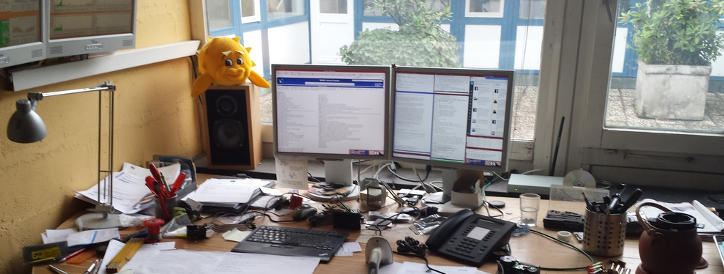

What would your ideal setup look like?

I like my current setup, as it has all of the tools I need. See my answer to the second question, about the software I use most frequently.
